Interrogating AI Bias through Digital Art

ABSTRACT

This field review leverages books, essays, academic journal articles, and a recent review of an emerging field to understand how racial bias in early photographic and film techniques carried over into deep (machine) learning and facial recognition artificial intelligence (AI), which has led to harmful technological designs. This review also discusses how contemporary artists incorporate AI technology in their creative work to both raise awareness about and help mitigate the harmful effects of unconscious bias. The review concludes by identifying challenges and offering recommendations for different stakeholders, including artists who may not be scholars, art scholars, practitioners, students, or educators.

Keywords: Artificial Intelligence; Racial Bias; Deep Learning; Facial Recognition; AI Art; Activism

History of Racialization/Bias in Photography and Film

Implicit bias in the development of photographic representations of racialized bodies has led to misrepresentations that preserve an ideology that views Black and Brown people as inherently inferior to white people (Hostetter 2010). During the analog era of photography and film, from the mid-nineteenth century to the late twentieth century, human faces and color charts were used to adjust the way film was developed and processed. These references were created using the faces of white women and, as a result, people with darker skin were often poorly rendered and underexposed. For example, in the mid-1950s Kodak created the Shirley Card, named after a former Kodak studio model, which became the standard for color calibration in photo labs all over the world (Roth 2009). Color film lab workers used image tests or “leader ladies” selected for their skin tones and hair color as part of a broader set of practices establishing whiteness or lighter skin as the default (Gross 2015). As a result, lighter skin became the baseline for all color film, centered on the needs and desires of a target mainstream audience and dominant culture (Dyer 1997; Lewis 2019). This color film system, with its existing racial biases, was simply carried over into digital images (McFadden 2014).

Photographic systems attempted to create a universal or neutral standard for all subjects, yet the norm ended up being white skin. In 2013, London-based artists Adam Broomberg and Oliver Chanarin brought attention to this issue in their work To Photograph the Details of a Dark Horse in Low Light, whose title refers to a “coded phrase used by Kodak to describe the capabilities of 1980s film stock that addressed a persistent dilemma in the photographic industry: how to adequately capture and render dark skin.” They installed billboard advertisements that juxtaposed Shirley Card images with tonal and color scales that were overlaid with the label “normal.” The artists also used a Polaroid ID-2 camera with film stock from the 1950s–1970s to show how the expired film could not properly render dark skin (Smith 2013). As a result, photographers have to use a special camera feature to lighten their subjects. The development of imaging technology that is not suited for more diverse skin types is not confined to photography; this limitation can also be found in film production.

Films produced in the mid-twentieth century, such as Jean Renoir’s The Golden Coach from 1952, show how the history of color calibration in photography, with its chemistry of inherent racial bias, was carried over into analog film. Renoir used a color film process that was standard at the time but rendered invisible the darker facial features of Black people. Scholars and cultural critics counter the notion that perceived skin bias in color film is unintentional or unconscious (Benjamin 2019; Lee 2020; Lewis 2019). According to Lorna Roth (2009), these deficiencies in film and photography were initially developed with a “global assumption of ‘Whiteness’” (117) embedded within their systems. Over time, this has robbed Black and Brown people of the pleasure of recognizing themselves in photography/film and thus feeling proud of their images. Additionally, racial bias in camera-based systems raises concerns that the harms of misidentification fall disproportionately on marginalized and vulnerable populations. Advancements in digital technology have not corrected this flaw because in low light, digital video still fails to adjust for multiple skin tones in an image. As a result, photographers and filmmakers have to put in extra labor to create adjustments or “fixes,” such as adding additional lighting to calibrate film for darker skin tones (Benjamin 2019, 104).

Bias in Software Development

The racialization of photography and film carried over into computer-generated imagery (CGI). Researchers such as Theodore Kim (2021) have identified racial bias embedded in CGI software that emphasizes the hegemonic visual features of Europeans and East Asians. In the industry, the skin tones and hair colors of Europeans and East Asians were the default standards in the development of CGI characters. Kayla Yup (2022) cites Kim, who notes how developers created algorithms (step-by-step instructions for computers to follow) as a way to generate “human skin,” a practice that, according to Kim, is “synonymous with calling pink bandaids ‘flesh-colored’.” Researchers (Kim et al. 2021, 1) have explored how this perspective supports seemingly neutral practices in CGI that have resulted in measurably biased outcomes. Their research looks at publications that present dark skin as a deviation from a “white baseline,” further reinforcing white supremacy.

One example of racial bias in CGI is software that gives computer-generated models a specific “skin glow” effect, which is commonplace in the industry (Borshukov and Lewis 2005). This effect is called subsurface scattering: light penetrates the surface of a translucent object (like skin, milk, or marble), scatters inside the material, and exits at a different point (Jensen et al. 2001). This process gives computer-generated objects a glow similar to that seen in seventeenth-century paintings like Johannes Vermeer’s Girl with a Pearl Earring, which shows how light softens and glimmers on the subject’s facial features.

According to Kim (2021), skin glow from subsurface scattering mutes or dulls the features of darker skin. In fact, it is “specular reflection” or shine that gives dark, brown-skinned subjects, like the ones in artist Kehinde Wiley’s large-scale figurative paintings, their character. Wiley’s approach to painting darker skin presents a greater range of chromatic possibilities (Smith 2008). Although Wiley may use analog or nondigital terms to describe his painting process, his portraits can serve as guides for CGI developers on how to use “specularity” (see Weyrich et al. 2006) rather than a scattering skin glow. The shine effect on dark skin creates the illusion of dimension; rather than relying on a diffuse glow, Wiley uses intensely concentrated paints and backlighting to emphasize the dimensionality of his subjects. To mitigate the impact of subsurface scattering in CGI, Kim (2021) suggests that software developers adjust their algorithms to apply specular reflection to computer-generated characters. The development of algorithms in AI image generation shows promise in this area.
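
The difference between a diffuse glow and specular shine can be sketched with a toy shading model. The following is only an illustration, not the method of Kim (2021) or Weyrich et al. (2006): it assumes a Lambertian diffuse term scaled by a hypothetical skin albedo, plus a Blinn-Phong highlight that does not depend on albedo. All function names and numeric values are assumptions chosen for the example.

```python
import math

def normalize(v):
    """Return the vector v scaled to unit length."""
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)

def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))

def shade(albedo, normal, light_dir, view_dir, spec_strength=0.04, shininess=32):
    """Toy shading: Lambertian diffuse plus Blinn-Phong specular.

    albedo is a hypothetical 0..1 diffuse reflectance. The specular term
    is deliberately independent of it (an assumption of this sketch).
    """
    n, l, v = normalize(normal), normalize(light_dir), normalize(view_dir)
    diffuse = albedo * max(dot(n, l), 0.0)
    half = normalize(tuple(a + b for a, b in zip(l, v)))
    specular = spec_strength * max(dot(n, half), 0.0) ** shininess
    return diffuse, specular

def specular_share(albedo):
    """Fraction of reflected light that comes from the highlight."""
    # Light and viewer are both directly above the surface,
    # so the highlight is at its peak.
    up = (0.0, 0.0, 1.0)
    diffuse, specular = shade(albedo, up, up, up)
    return specular / (diffuse + specular)

lighter = specular_share(albedo=0.60)  # illustrative value only
darker = specular_share(albedo=0.10)   # illustrative value only
print(f"specular share, lighter albedo: {lighter:.3f}")
print(f"specular share, darker albedo:  {darker:.3f}")
```

Under these assumptions the highlight makes up a much larger share of the light reflected from the darker surface, which is one way to read the argument that shine, rather than glow, carries the visual character of darker skin.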

The Rise of AI in Digital Art

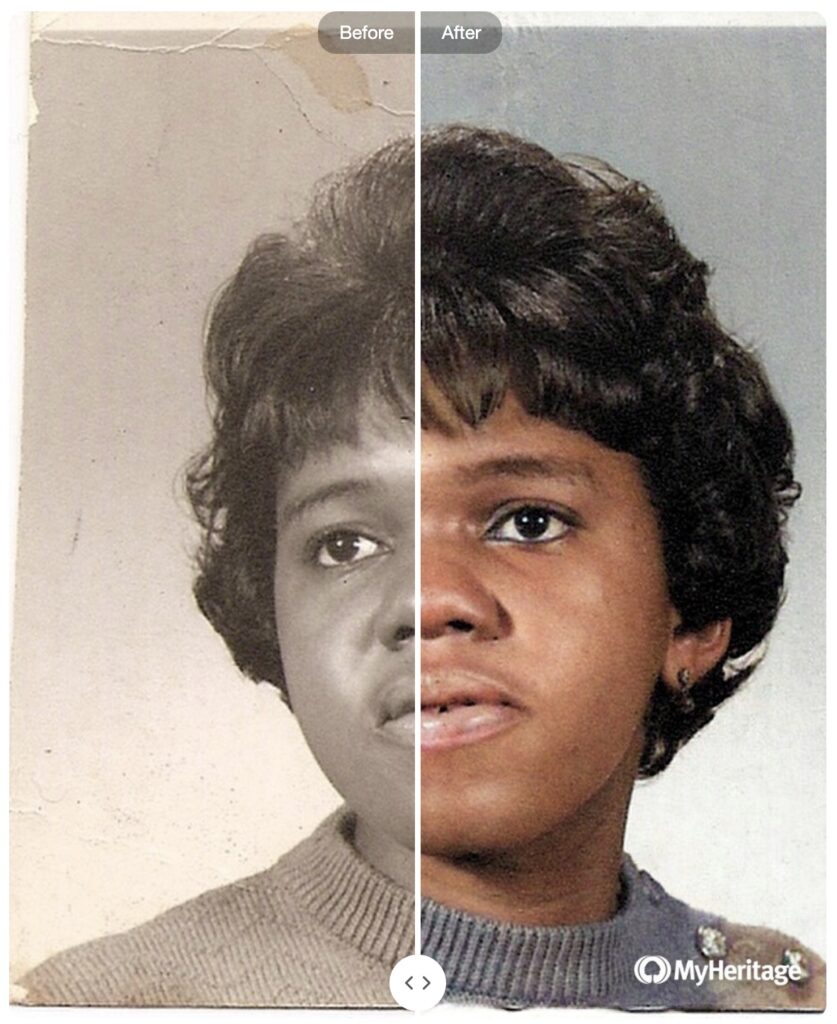

Emerging AI technology has the potential to replicate some of the processes used by artists when creating portraits. AI-driven software is now being developed to help artists refine facial details, colorize, or enhance photographs and films. This is done through deep (machine) learning, a subset of AI inspired by the human brain that gives machines (computers) a form of intelligence without their being explicitly programmed (H, G, and P 2019; Miller 2019). For example, a tool called DeOldify uses an algorithm to train a machine on fourteen million images so that it can analyze how objects in the world are colored (Simon 2020). It applies this knowledge to old black-and-white films using a generative adversarial network (GAN), a kind of deep (machine) learning AI that generates images and other media based on an underlying distribution of data. The data includes human faces, songs, cartoon characters, text, and movie posters.

Another deep learning tool artists use is called “image style transfer,” which renders new images using different styles (Gatys, Ecker, and Bethge 2016, 2421; Hertzmann 2018; Miller 2019). The software can discover the details of a source photo, combine its contents with styles of different images, and then transfer those styles to the original image. In other words, a digital photograph or video can be re-created in the distinctive style of a Van Gogh painting. An example of this art is the FUTURES exhibition at the Arts and Industries Building, Smithsonian, in which each “featured futurist” portrait combines original photos of people—Buckminster Fuller, Alexander Graham Bell, Isamu Noguchi, Helen Keller, and Octavia Butler among them—with style images gathered from their contributions to the world (Hickman 2021). For example, the portrait of civil rights leader Floyd McKissick featured in the FUTURES exhibit was generated using plans (as source style images) for Soul City, a community first proposed by McKissick in 1969.
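
How style can be separated from content is easier to see in a small numeric sketch. In the approach described by Gatys, Ecker, and Bethge (2016), style is summarized by correlations between feature channels (a Gram matrix), while content is compared position by position. The code below is a simplified illustration under stated assumptions: the "feature maps" are tiny hand-written lists rather than activations from a trained network, the normalization is simplified, and the function names are hypothetical.

```python
def gram_matrix(features):
    """Channel-by-channel correlations.

    features is a list of channels, each a flat list of activations
    over the same spatial positions.
    """
    return [[sum(a * b for a, b in zip(ci, cj)) for cj in features]
            for ci in features]

def style_loss(generated, style):
    """Mean squared difference between the two Gram matrices."""
    g, s = gram_matrix(generated), gram_matrix(style)
    n = len(g)
    return sum((g[i][j] - s[i][j]) ** 2
               for i in range(n) for j in range(n)) / (n * n)

def content_loss(generated, content):
    """Mean squared difference between activations at each position."""
    diffs = [(a - b) ** 2
             for cg, cc in zip(generated, content)
             for a, b in zip(cg, cc)]
    return sum(diffs) / len(diffs)

# Toy two-channel feature maps over four positions (made-up numbers).
style_features = [[1.0, 0.0, 2.0, 0.0],
                  [0.0, 1.0, 0.0, 2.0]]
# Same activations with the spatial positions reversed.
rearranged = [list(reversed(channel)) for channel in style_features]

print("style loss:  ", style_loss(rearranged, style_features))
print("content loss:", content_loss(rearranged, style_features))
```

Rearranging the positions leaves the style loss at zero while the content loss is positive, which illustrates why a Gram-matrix summary captures texture and color relationships but not the layout of the image. A full system would minimize a weighted sum of the two losses over the pixels of the generated image.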

Artists use GANs and image style transfer to collaborate or work with AI. This interaction allows artists to figure out how much they want the AI to intervene in their process, controlling exactly how much the algorithm reconstructs their images from the input (photos) and custom style images. The extent of the intervention depends on the subject. Artists can use AI to move away from the use of universal equations to simulate skin, helping improve the way digital humans are rendered, and giving Black and Brown people more positive ways to view themselves. Image style transfer gives artists like me a wider range of possibilities for enhancing darker skin. For example, my Gilded series explores specularity with AI. For each portrait, I added shine effects from vintage gold and bronze tapestries (as image styles). This approach was used to create a mural-sized portrait to celebrate the life of Greg Tate, a preeminent Black American cultural critic, author, and musician (Madondo 2022). Soon, specularity in addition to scattering skin glow will be an industry standard—Photoshop now has a neural (GAN) filter—and the output will be of higher quality (Sawalich 2021).

The Bias in and Harms of Facial Recognition in AI Today

AI technologies such as deep (machine) learning and facial recognition allow for the extraction of a wide range of features from images. However, face-analyzing AI has been shown by Joy Buolamwini (see Cohen 2017) to be biased. Facial recognition AI is designed to draw out certain features and detect patterns in faces, with the aim of classifying them. The software is trained to automatically identify different aspects of faces and tell us if the faces belong to the same people or not. This is referred to as “pattern matching.” Different images of the same person help make a close match, whereas if you add in images of a different person, the output will be very different (Noor 2017). The problem with this system is that it has been shown to falsely identify Black and Asian faces 10 to 100 times more often than white faces (Grother, Ngan, and Hanaoka 2019). The software also falsely identifies women more than it does men—making Black women particularly vulnerable to algorithmic or AI bias. This brings us back to the earlier use of Shirley Cards in photography and underscores how old racial biases persist because of how imaging systems are calibrated or trained.
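
The matching step can be sketched in a few lines. Many face recognition systems reduce each face image to a list of numbers (an embedding) and then compare embeddings. The example below is a minimal sketch under stated assumptions: the four-number embeddings are invented for illustration, the threshold of 0.8 is arbitrary, and the function names are hypothetical rather than taken from any particular product.

```python
import math

def cosine_similarity(a, b):
    """Similarity of two embeddings: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def same_person(emb_a, emb_b, threshold=0.8):
    """Declare a match when similarity reaches the (assumed) threshold."""
    return cosine_similarity(emb_a, emb_b) >= threshold

# Invented embeddings; a real system would compute these from images.
person_a_photo_1 = [0.90, 0.10, 0.30, 0.20]
person_a_photo_2 = [0.80, 0.20, 0.35, 0.10]
person_b_photo = [0.10, 0.90, 0.20, 0.70]

print(same_person(person_a_photo_1, person_a_photo_2))
print(same_person(person_a_photo_1, person_b_photo))
```

A false match occurs when embeddings of two different people land close enough to clear the threshold. If a model was trained mostly on faces from one group, embeddings for other groups may be less well separated, which is one plausible route to the unequal error rates described above.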

Face-analyzing AI systems work significantly better for white faces than Black ones (Buell 2018). In 2018, researchers Joy Buolamwini and Timnit Gebru found that if the person in a photograph was white and male, then the systems guessed correctly more than 99 percent of the time. On the other hand, the systems failed to identify Black women 50 percent of the time. The reason for this is that most face-analysis programs are trained and tested using databases of hundreds of pictures, which are overwhelmingly white and male (Buolamwini and Gebru 2018). White male developers may not be aware of the implicit bias being built into these tools. As a result, people with darker faces do not register accurately in facial recognition AI that analyzes the facial expressions of job applicants, for example; in surveillance systems that use AI to identify people in crowds; or in systems used by law enforcement to scan driver’s license photos. This means that if you happen to have darker skin, the technology is much less accurate, leading to more mistakes (like mistaken identity and racial profiling), which disproportionately affect Black and Brown people. Indeed, Joy Buolamwini, a Ghanaian Canadian computer scientist and digital activist, discovered that the software was more likely to correctly identify her if she was wearing a painted white mask than when she used her own (darker) face (Lee 2020).
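
Disparities of this kind only become visible when accuracy is reported separately for each group rather than as a single overall figure. The sketch below shows that kind of disaggregated audit. The records are synthetic and invented purely for illustration; they do not reproduce the data or results of Buolamwini and Gebru (2018) or any other study, and the group labels and function name are hypothetical.

```python
from collections import defaultdict

def accuracy_by_group(records):
    """Return accuracy per group from (group, predicted, actual) records."""
    totals = defaultdict(int)
    correct = defaultdict(int)
    for group, predicted, actual in records:
        totals[group] += 1
        correct[group] += int(predicted == actual)
    return {group: correct[group] / totals[group] for group in totals}

# Synthetic audit records (made up): group_a is heavily overrepresented.
records = (
    [("group_a", "match", "match")] * 16
    + [("group_b", "match", "match")] * 1
    + [("group_b", "no_match", "match")] * 3
)

overall = sum(pred == actual for _, pred, actual in records) / len(records)
per_group = accuracy_by_group(records)

print(f"overall accuracy: {overall:.2f}")
for group, accuracy in sorted(per_group.items()):
    print(f"{group}: {accuracy:.2f}")
```

In this toy data set the overall figure looks respectable because the overrepresented group dominates the average, while the per-group breakdown exposes how poorly the underrepresented group is served.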

Facial recognition AI negatively impacts systemically powerless and historically marginalized identities. AI bias can have a detrimental impact on Black and Brown communities even when the use of AI is altruistic. According to Ruha Benjamin (2019), computer programming languages (codes) and algorithms can “act as narratives” that reaffirm existing inequalities and “operate within powerful systems of meaning that render some things visible, others invisible, and create a vast array of distortions and dangers” (7). For example, Benjamin (2019, 50) shows how the 2016 Beauty.AI pageant, based on a machine learning algorithm, strongly preferred contestants with lighter skin, choosing only six nonwhite winners out of thousands of applicants, leaving its creators confused about the evident racial bias. The contest, which was judged by machines, was supposed to use objective factors, such as facial symmetry and wrinkles, to identify the most attractive contestants. As with Buolamwini’s white mask test, the Beauty.AI robot simply did not read people with darker skin. The robot sorted photos that were labeled or tagged with information on specific facial features, and thus the AI was encoded with biases about what is, or what defines, beauty.

Even when facial recognition is not biased, it can be harmful because of how it is deployed (that is, against whom and for what reasons). Social justice groups such as the Algorithmic Justice League (AJL) explore the trade-offs between the risks and benefits of complex facial recognition technologies. The purpose is to create better, more diverse face databases for development and testing (Learned-Miller et al. 2020). AJL offers a primer for nontechnical audiences to increase their understanding of AI terminology, applications, and testing (Buolamwini et al. 2020). The primer clarifies the precise meanings of face-analyzing projects, such as AJL’s, that can be used as guideposts for diverse readers, artists, and educators who wish to interrogate algorithmic/racial bias in AI, as well as highlight the harms these systems can cause. AI technologies such as deep (machine) learning and image style transfer will allow people to build their own data sets, train new AI models, and create work to fight back against oppressive AI technology.

Corporations often reinforce the misconception that algorithms are neutral when, in fact, algorithms are created by people who carry biases and prejudices. These corporations use data that implicitly includes biases and prejudices. Racial bias in AI is not unique to facial recognition. Safiya Noble (2018), a scholar whose research focuses on the design of digital media platforms on the internet and their impact on society, examines racial bias in online search engines, such as Google, to “uncover new ways of thinking about search results and the power that such results have on our ways of knowing and relating” (71). For example, when Noble used the search phrase “black girls,” she discovered that all the top results led to porn sites. She concluded that search engines “oversimplify complex phenomena” and that “algorithms that rank and prioritize for profits compromise our ability to engage with complicated ideas” (118).

Facial Recognition and Other AI in Art (and as Resistance)

Art has been used to address bias in AI that often serves as a technological gatekeeper for industrial complexes wherein businesses are entwined in sociopolitical systems that may profit from maintaining socially detrimental systems. Although Google has recently begun to work with people with dark skin to improve the accuracy of facial recognition technology (Wong 2019), Black users of apps like Lyft and Uber have had to wait longer than their white counterparts for rides (Houser 2018). To counter this, artists are using the same technologies to reclaim their agency and to counter repressive or oppressive technology through activism, art, and education (Gaskins 2019). For example, Stephanie Dinkins (Blaisdell 2021) and Amelia Winger-Bearskin (Jadczak 2016) use AI to interrogate biases by applying rigorous artistic analysis—determining what features are conveyed and why artists use them—to computer data. They create and use algorithms to establish new artistic processes that show how artworks are performed, presented, or produced. This includes the use of AI art and portraiture that represents people from diverse communities.

Contemporary artists, designers, and activists incorporate AI technology in their work to mitigate its harmful effects. They use machine (deep) learning to raise awareness of the social implications of technology through research of facial recognition AI that, by itself, reduces humanity to numbers or data. According to a report by the National Endowment for the Arts (2021), today’s artists are developing and deploying algorithms that drive AI in an effort to interrogate its increased use in the world and how this use impacts people’s daily lives. For example, Kyle McDonald, a media artist, intertwines coding and art to highlight how artists can use new algorithms in creative and subversive ways. McDonald uses machine learning to create his own systems such as Facework, an app that “auditions” users for jobs based entirely on how they look. The goal of the app is to “expose a world that only computer scientists and big corporations get to see” (Wilson 2020). Facework is McDonald’s commentary on the increased use of facial recognition AI in popular devices such as iPhones and surveillance cameras, Google, and social media apps.

Other examples of this approach include ImageNet Roulette, an AI classification tool created by artist Trevor Paglen and AI researcher Kate Crawford (Crawford and Paglen 2019) in response to research showing that modern AI is created by and for specific people. Paglen has said that the tool is flawed in that it classifies people in “problematic” and “offensive” ways (Statt 2019). The results reveal how little-explored classification methods yield “racist, misogynistic and cruel results” (Solly 2019). Artists have also been developing tools for stymieing facial recognition. For example, Jip van Leeuwenstein developed a lens-type mask that distorts the face yet allows other people to see the wearer’s facial expressions. Adam Harvey created computer vision (CV) dazzle makeup and Hyperface, a type of camouflage that reduces the confidence score of face-analyzing AI by providing false faces that distract AI algorithms.

Centering Marginalized People in AI Art

AI art from historically marginalized groups troubles the systems and processes that technology such as biased search engines (Noble 2018) and facial recognition (Buolamwini and Gebru 2018) renders invisible. For example, Nigerian American artist Mimi Ọnụọha’s mixed media installation Us, Aggregated 2.0, gives agency to women of color by using Google’s reverse-image search algorithms that have categorized and tagged images with the label “girl.” The work presents photographs of women in a cluster of frames, each with an image of a mother at the center. The cluster is intended to evoke a feeling of family and community, which belies the fact that they are randomly sorted and put together by an algorithm that labels them all as similar. sava saheli singh’s #tresdancing is a short film that critiques AI, online testing software, social media, gamification, and the weaponization of care. The main protagonist is a Black Canadian girl who is forced to ramp up her engagement with a new, experimental technology to make up for a failing grade. Both projects address bias in AI technology using popular tools.

Mozilla is funding several projects by Black artists from around the world that spotlight how AI reinforces or disrupts systems of oppression or celebrates cultures. This includes Afro Algorithms, a short animated film by Anatola Araba Pabst that imagines a distant future where AI technology and machine learning are part of everyday life. The filmmaker’s intent is to spark “conversations about race, technology, and where humanity is driving the future of this planet” (Pabst 2021). The film’s main protagonist, Aero, is an AI-driven robot who realizes that important voices and worldviews are missing from her databank, including the experiences of the historically marginalized and oppressed. Other Mozilla-sponsored projects include Johann Diedrick’s Dark Matters, an interactive web experience that addresses the absence of Black speech in training data sets. Tracey Bowen’s HOPE is a documentary and immersive experience that follows the artist and civic tech entrepreneur’s journey to establish the United Kingdom’s first Black-led task force to tackle AI bias and discrimination.

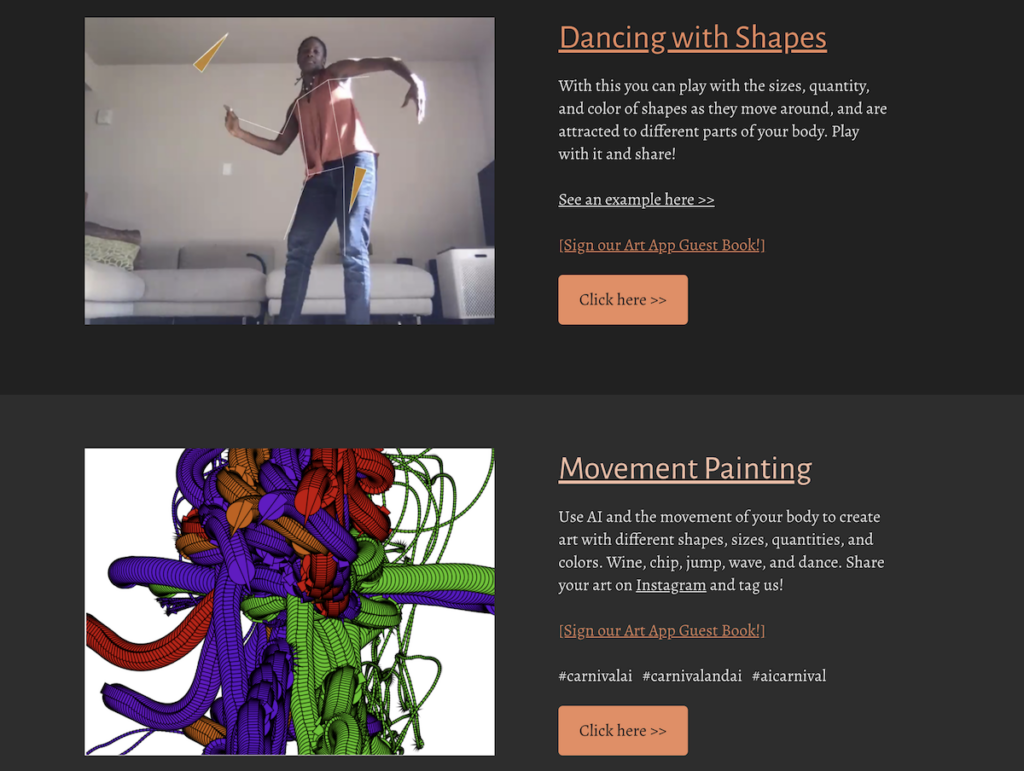

Carnival AI, another Mozilla-sponsored project, was created in response to a creative strike by Black TikTok performers who were frustrated by the misappropriation of their dances by white influencers. These influencers seldom give credit to Black creators, who are often marginalized (Onwuamaegbu 2021). The team responsible for developing Carnival AI—Vernelle Noel, Valencia James, and me—presented an online app that uses a deep learning model to generate imagery based on Caribbean dance performance data (King 2021). This was done by using an AI technique called “pose estimation” or PoseNet that looks at a combination of the dancer’s pose and the orientation of the same dancer in physical space. Participants submitted short videos of their Carnival dances that were then processed using AI. In Rashaad Newsome’s Assembly visitors encounter “Being, The Digital Griot,” a nonbinary, nonraced AI who transcends categorizations while invoking culture, storytelling, and dance performance (Schwendener 2022). “Being” teaches dance and continuously generates and recites poetry based on the work of queer poets, feminist authors, and activists. Both Carnival AI and Assembly use AI to center voices and cultures of the African Diaspora.
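
To give a sense of what pose estimation produces, the sketch below works with the kind of output such models typically report: a set of named body keypoints, each with image coordinates and a confidence score. This is not the Carnival AI implementation. The data structure is a simplified, hypothetical stand-in for a pose estimator's output, and the coordinates, scores, and function names are invented for illustration.

```python
import math

# Hypothetical, simplified pose-estimator output: one entry per body part,
# with image coordinates and a 0..1 confidence score (made-up values).
pose = {
    "left_shoulder": {"x": 0.0, "y": 0.0, "score": 0.95},
    "left_elbow": {"x": 1.0, "y": 0.0, "score": 0.90},
    "left_wrist": {"x": 1.0, "y": 1.0, "score": 0.85},
    "left_ankle": {"x": 0.4, "y": 3.0, "score": 0.20},
}

def confident_keypoints(pose, min_score=0.5):
    """Keep only keypoints the model is reasonably sure about."""
    return {name: kp for name, kp in pose.items() if kp["score"] >= min_score}

def joint_angle(pose, a, joint, b):
    """Angle in degrees at `joint` between segments joint->a and joint->b."""
    ax, ay = pose[a]["x"] - pose[joint]["x"], pose[a]["y"] - pose[joint]["y"]
    bx, by = pose[b]["x"] - pose[joint]["x"], pose[b]["y"] - pose[joint]["y"]
    angle = abs(math.degrees(math.atan2(by, bx) - math.atan2(ay, ax))) % 360
    return 360 - angle if angle > 180 else angle

reliable = confident_keypoints(pose)
elbow = joint_angle(reliable, "left_shoulder", "left_elbow", "left_wrist")
print(sorted(reliable))
print(f"left elbow angle: {elbow:.1f} degrees")
```

Tracking quantities like joint angles across the frames of a video turns a dance into a sequence of numbers, which is the sort of performance data a generative model could then be trained on or driven by.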

Building Community Capacity Through AI Art

Stephanie Dinkins leads an initiative that makes space for a community of artists, programmers, and activists. AI.Assembly invites members to brainstorm ideas for AI prototypes (Martinez 2020). Projects by member artists such as Ayodamola Tanimowo Okunseinde help foster a sense of identity, ownership, and empowerment so that other creative people can gain greater control over future development in AI. Okunseinde’s Incantation critiques AI and machine learning algorithms as “languages of exclusion, challenging people to reconsider the ontologies and predictive power of such systems” (Fortunato 2020). In this workshop, participants paint glyphs based on the theme of community. The AI analyzes this cultural data, which is transmitted to a touch-based, or haptic, body suit.

Hyphen-Labs commissioned COMUZI to explore the sociocultural implications of AI and its effect on intimate relationships. The project facilitated a workshop in which participants looked at how cultural background influences people’s relationships with AI and pushes for equity in the development of new technologies. Projects like COMUZI show how artists work with others to disseminate research and design more racially equitable futures and a more human-centered AI. The artists featured here are part of a growing cohort who are finding institutional and foundational support to create projects that address current trends in AI, creating stories that are often speculative and predominantly about people from their communities.

Through art, practitioners work with their communities to explore myriad ways to move toward a world with more equitable and accountable AI. The workshops, films, and exhibitions make people more aware of issues with AI such as algorithmic (implicit) bias, selective surveillance, and failures in facial recognition technology; engage them in conversations or performances; and help them come up with actionable steps to address the problems. These activities will culminate in the creation of more human-centered AI that differs from the current AI used by the private sector.

Conclusion: Challenges and Recommendations

Racial bias can move from one system to the next, especially when diverse sources of information and data are not considered at the development phase. Bias in analog photographic and cinematic systems included the use of tools, such as Shirley Cards, to calibrate or train cameras. The result was the production of racial biases that were “baked” into the history of digital art and media (Kim 2021). Bias was overlooked when developing computer-generated imagery that washed out the features of darker-skinned people. More recently, biased data has been used to train AI systems like facial recognition software. As a consequence, artists have worked to fix the issue through creative production. Their work shows how taking a more inclusive and human-centered or empathetic approach to AI development can move issues related to racial bias away from the “biological and genetic systems that have historically dominated its definition toward questions of technological agency” (Coleman 2009).

Researchers such as Kim et al. (2021) have proposed that software developers recruit or engage more diverse stakeholders to ensure equity and fairness in AI. They assert that no one is better positioned to create algorithms that capture the subtle qualities of darker skin than the people who see these features in the mirror every day. Work by Ruha Benjamin (2019), Safiya Noble (2018), and Joy Buolamwini and Timnit Gebru (2018) aims to bring more awareness of bias in emerging AI technologies such as deep (machine) learning and facial recognition. Artists are using these technologies to address issues such as bias created through misrepresentation and through the training data used to teach machines. Artists often experiment with the possibilities of uprooting racial bias; in recent times, several have used AI to enhance or build on their work. Examples of this can be found in works that identify and create new uses for AI that center marginalized voices and help build community capacity through workshop facilitation.

New avenues of research and creative work are urgently needed because oppressive algorithms in AI are being used more and more in society. For example, biased algorithms are used in applications that draw on millions of photographs from databases widely used to train AI. These algorithms have the potential to amplify existing inequalities. While researchers seek to reveal the underlying logic of how images are used to train AI systems to “see” the world, artists are receiving institutional and foundational support to engage people in conversations about bias in AI through exhibition, performance, websites, films, and app development. There is much at stake: AI can be trained to promote or discriminate, approve or reject, even render people visible or invisible. AI tools are used to maintain or withhold power, with real-world consequences.

Thus, these tools must be interrogated, and we must have wider public discussions about the consequences of their continued use. Researchers like Gebru are leading artists, scholars, activists, legislators, technologists, and others to reimagine and “reshape ideas about what AI is and what it should be” (Perrigo 2022). They are calling for change to avoid further harm to vulnerable communities, moving people toward a future where they feel empowered not only to reimagine constructions of race (class, gender, and so on) but also to show how collaboration and community-building can lead to more liberatory constructions of AI for society in general.

Recommended Readings

Benjamin, Ruha. 2019. Race After Technology: Abolitionist Tools for the New Jim Code. Cambridge, UK: Polity Press.

Buolamwini, Joy, and Timnit Gebru. 2018. “Gender Shades: Intersectional Accuracy Disparities in Commercial Gender Classification.” Proceedings of the 1st Conference on Fairness, Accountability and Transparency 81: 77–91. http://proceedings.mlr.press/v81/buolamwini18a/buolamwini18a.pdf.

Kim, Theodore, Holly Rushmeier, Julie Dorsey, Derek Nowrouzezahrai, Raqi Syed, Wojciech Jarosz, and A.M. Darke. 2022. “Countering Racial Bias in Computer Graphics Research.” In Special Interest Group on Computer Graphics and Interactive Techniques Conference Talks (SIGGRAPH ’22 Talks). ACM, New York, NY. https://doi.org/10.1145/3532836.3536263.

Kim, Theodore. 2020. “The Racist Legacy of Computer-Generated Humans.” Scientific American, August 18, 2020. https://www.scientificamerican.com/article/the-racist-legacy-of-computer-generated-humans/.

Miller, Arthur I. 2019. The Artist in the Machine: Inside the New World of Machine-Created Art, Literature, and Music. Cambridge, MA: The MIT Press.

References

Agrawal, Raghav. 2021. “Posture Detection Using PoseNet with Real-Time Deep Learning Project.” Analytics Vidhya. September 5, 2021. https://www.analyticsvidhya.com/blog/2021/09/posture-detection-using-posenet-with-real-time-deep-learning-project.

Artificial Intelligence + Carnival + Creativity. 2022. “Carnival Dance + Art App.” https://carnival-ai.com/carnival-ai-app.

Arts and Industries Building. n.d. “FUTURES.” Accessed April 6, 2022. https://aib.si.edu/futures.

Okunseinde, Ayodamola. 2020. ayo.io. Accessed April 6, 2022. http://ayo.io/index.html#.

Benjamin, Ruha. 2019. Race After Technology: Abolitionist Tools for the New Jim Code. Cambridge, UK: Polity Press.

Blaisdell, India. 2021. “Stephanie Dinkins Revolutionizes Fine Art and Artificial Intelligence.” The McGill Tribune, March 23, 2021. https://www.mcgilltribune.com/a-e/stephanie-dinkins-revolutionizes-fine-art-and-artificial-intelligence-03232021.

Borshukov, George, and J. P. Lewis. 2005. “Realistic Human Face Rendering for ‘The Matrix Reloaded.’” In ACM SIGGRAPH 2005 Courses. https://doi.org/10.1145/1198555.1198593.

Buell, Spencer. 2018. “MIT Researcher: AI Has a Race Problem, and We Need to Fix It.” Boston Magazine, February 23, 2018. https://www.bostonmagazine.com/news/2018/02/23/artificial-intelligence-race-dark-skin-bias.

Buolamwini, Joy, and Timnit Gebru. 2018. “Gender Shades: Intersectional Accuracy Disparities in Commercial Gender Classification.” Proceedings of the 1st Conference on Fairness, Accountability and Transparency 81: 77–91. http://proceedings.mlr.press/v81/buolamwini18a/buolamwini18a.pdf.

Buolamwini, Joy, Vicente Ordóñez, Jamie Morgenstern, and Erik Learned-Miller. 2020. Facial Recognition Technologies: A Primer. Cambridge, MA: Algorithmic Justice League. https://assets.website-files.com/5e027ca188c99e3515b404b7/5ed1002058516c11edc66a14_FRTsPrimerMay2020.pdf.

Cohen, Arianne. 2017. “The Digital Activist Taking Human Prejudice Out of Our Machines.” Bloomberg Businessweek, June 26, 2017. https://www.bloomberg.com/news/articles/2017-06-26/the-digital-activist-taking-human-prejudice-out-of-our-machines.

Coleman, Beth. 2009. “Race as Technology.” Camera Obscura 24 (1 (70)): 177–207. https://doi.org/10.1215/02705346-2008-018.

COMUZI. n.d. “How Does AI Augment Human Relationships?” Accessed April 6, 2022. https://www.comuzi.xyz/hdaahr.

Crawford, Kate, and Trevor Paglen. 2019. “Excavating AI.” https://excavating.ai.

del Barco, Mandalit. 2014. “How Kodak’s Shirley Cards Set Photography’s Skin-Tone Standard.” NPR, November 13, 2014. https://www.npr.org/2014/11/13/363517842/for-decades-kodak-s-shirley-cards-set-photography-s-skin-tone-standard.

Diedrich, Johann. n.d. Johann Diedrick. Accessed April 6, 2022. http://www.johanndiedrick.com.

Dinkins, Stephanie. n.d. Stephanie Dinkins. Accessed April 6, 2022. https://www.stephaniedinkins.com.

Dyer, Richard. 1997. White: Essays on Race and Culture. London: Routledge.

Fortunato, Sofia. 2020. “Straight Talk: TechnoShamanism with Ayodamola Okunseinde.” SciArt Magazine, February 2020. https://www.sciartmagazine.com/straight-talk-ayodamola-okunseinde.html.

Fritz AI. n.d. “Pose Estimation Guide.” Accessed April 6, 2022. https://www.fritz.ai/pose-estimation.

Gaskins, Nettrice. “Gilded: Art & Algorhythms.” https://www.nettricegaskins.com/gilded.

Gaskins, Nettrice. 2019. “Not Just a Number: What Cory Doctorow’s ‘Affordances’ Show about Efforts to Reclaim Technology.” Slate, October 26, 2019. https://slate.com/technology/2019/10/cory-doctorow-affordances-response-ai.html.

Gatys, Leon A., Alexander S. Ecker, and Matthias Bethge. 2016. “Image Style Transfer Using Convolutional Neural Networks.” IEEE Conference on Computer Vision and Pattern Recognition (CVPR). https://doi.org/10.1109/CVPR.2016.265.

Gross, Benjamin. 2015. “The Human Test Patterns Who First Calibrated Color TV.” The Atlantic, June 28, 2015. https://www.theatlantic.com/technology/archive/2015/06/miss-color-tv/396266.

Grother, Patrick J., Mei L. Ngan, and Kayee K. Hanaoka. 2019. Face Recognition Vendor Test Part 3: Demographic Effects. NIST Interagency/Internal Report (NISTIR). Gaithersburg, MD: National Institute of Standards and Technology. https://www.nist.gov/publications/face-recognition-vendor-test-part-3-demographic-effects.

H, Santhi, Gopichand G, and Gayathri P. 2019. “Enhanced Artistic Image Style Transfer Using Convolutional Neural Networks.” Proceedings of International Conference on Sustainable Computing in Science, Technology and Management (SUSCOM). February 26–28, 2019. http://dx.doi.org/10.2139/ssrn.3354412.

Harvey, Adam. n.d. “Critical Art and Research on Surveillance, Privacy, and Computer Vision.” Accessed April 6, 2022. https://ahprojects.com.

Hertzmann, Aaron. 2018. “Image Stylization: History and Future (Part 3).” Adobe Research, July 19, 2018. https://research.adobe.com/news/image-stylization-history-and-future-part-3.

Hickman, Matt. 2021. “The Smithsonian Arts + Industries Building Awakens for a Glimpse of the Futures.” The Architect’s Newspaper, November 19, 2021. https://www.archpaper.com/2021/11/smithsonian-arts-industries-building-futures.

Hostetter, Ellen. 2010. “The Emotions of Racialization: Examining the Intersection of Emotion, Race, and Landscape through Public Housing in the United States.” GeoJournal 75 (3): 283–98. https://doi.org/10.1007/s10708-009-9307-4.

Houser, Kristin. 2018. “Uber and Lyft Still Allow Racist Behavior, but Not as Much as Taxi Services.” The Byte (blog), Futurism, July 16, 2018. https://futurism.com/the-byte/racial-discrimination-ridehaiing-apps.

Jadczak, Felicia. 2016. “Meet Amelia Winger-Bearskin.” She+ Geeks Out, November 1, 2016. https://www.shegeeksout.com/blog/meet-amelia.

Jensen, Henrik Wann, Stephen R. Marschner, Marc Levoy, and Pat Hanrahan. 2001. “A Practical Model for Subsurface Light Transport.” In Proceedings of the 28th Annual Conference on Computer Graphics and Interactive Techniques (SIGGRAPH ’01). https://doi.org/10.1145/383259.383319.

Joury, Ari. 2020. “What Is a GAN? How a Weird Idea Became the Foundation of Cutting-Edge AI.” Towards Data Science, January 20, 2020. https://towardsdatascience.com/what-is-a-gan-d201752ec615.

Kim, Theodore. 2021. “Anti-Racist Graphics Research (SIGGRAPH 2021).” August 9, 2021. YouTube video. 54:25. https://www.youtube.com/watch?v=ROuE8xYLpX8.

Kim, Theodore, Holly Rushmeier, Julie Dorsey, Derek Nowrouzezahrai, Raqi Syed, Wojciech Jarosz, and A. M. Darke. 2021. “Countering Racial Bias in Computer Graphics Research.” ArXiv. https://doi.org/10.48550/arXiv.2103.15163.

King, Nelson A. 2021. “AI Extends Virtual Carnival Experiences.” Caribbean Life News, September 16, 2021. https://www.caribbeanlifenews.com/virtual-carnival-experiences-showcase-how-ai-can-extend-black-creativity-and-joy.

Learned-Miller, Erik, Vicente Ordóñez, Jamie Morgenstern, and Joy Buolamwini. 2020. Facial Recognition Technologies in the Wild: A Call for a Federal Office. Cambridge, MA: Algorithmic Justice League. https://assets.website-files.com/5e027ca188c99e3515b404b7/5ed1145952bc185203f3d009_FRTsFederalOfficeMay2020.pdf.

Lee, Jennifer. 2020. “When Bias Is Coded into Our Technology.” NPR, February 8, 2020. https://www.npr.org/sections/codeswitch/2020/02/08/770174171/when-bias-is-coded-into-our-technology.

Lewis, Sarah. 2019. “The Racial Bias Built into Photography.” New York Times, April 25, 2019. https://www.nytimes.com/2019/04/25/lens/sarah-lewis-racial-bias-photography.html.

Madondo, Bongani. 2022. “Refiguring Tate: Exuberance in a Silent Way.” The Mail & Guardian, March 17, 2022. https://mg.co.za/friday/2022-03-17-refiguring-tate-exuberance-in-a-silent-way.

Martinez, Tommy. 2020. “Towards an Equitable Ecosystem of Artificial Intelligence.” Pioneer Works, May 15, 2020. https://pioneerworks.org/broadcast/stephanie-dinkins-towards-an-equitable-ecosystem-of-ai.

McFadden, Syreeta. 2014. “Teaching the Camera to See My Skin.” BuzzFeed News, April 2, 2014. https://www.buzzfeednews.com/article/syreetamcfadden/teaching-the-camera-to-see-my-skin.

Miller, Arthur I. 2019. The Artist in the Machine: Inside the New World of Machine-Created Art, Literature, and Music. Cambridge, MA: MIT Press.

Mozilla. 2021. “Black Interrogations of AI: Announcing Our 8 Latest Creative Media Awards.” Mozilla Foundation. https://foundation.mozilla.org/en/blog/announcing-8-projects-examining-ais-relationship-with-racial-justice.

National Endowment for the Arts. 2021. Tech as Art: Supporting Artists Who Use Technology as a Creative Medium. Washington, DC: National Endowment for the Arts.

Newsome, Rashaad. 2022. “Assembly.” March 6, 2022. YouTube video. 00:14. https://www.youtube.com/watch?v=TmV7G7NMGR8.

Noble, Safiya Umoja. 2018. Algorithms of Oppression: How Search Engines Reinforce Racism. New York: NYU Press.

Noor, Taus. 2017. “Facial Recognition Using Deep Learning.” Towards Data Science, March 25, 2017. https://towardsdatascience.com/facial-recognition-using-deep-learning-a74e9059a150.

Ọnụọha, Mimi. 2018. Us, Aggregated 2.0. Accessed April 6, 2022. https://mimionuoha.com/us-aggregated-20.

Onwuamaegbu, Natachi. 2021. “TikTok’s Black Dance Creators Are on Strike.” Washington Post, June 25, 2021. https://www.washingtonpost.com/lifestyle/2021/06/25/black-tiktok-strike.

Pabst, Anatola Araba. 2021. “Afro Algorithms: Imagining New Possibilities for Race, Technology, and the Future through Animated Storytelling.” Patterns 2 (8). https://doi.org/10.1016/j.patter.2021.100327.

Perrigo, Billy. 2022. “Timnit Gebru on Not Waiting for Big Tech to Fix AI.” Time, January 18, 2022. https://time.com/6132399/timnit-gebru-ai-google.

Roth, Lorna. 2009. “Looking at Shirley, the Ultimate Norm: Colour Balance, Image Technologies, and Cognitive Equity.” Canadian Journal of Communication 34 (1): 111–36. https://doi.org/10.22230/cjc.2009v34n1a2196.

Sawalich, William. 2021. “Photoshop’s Astounding Neural Filters.” Digital Photo Magazine, June 14, 2021. https://www.dpmag.com/how-to/tip-of-the-week/photoshops-astounding-neural-filters.

Schwendener, Martha. 2022. “Rashaad Newsome Pulls out All the Stops.” New York Times, February 24, 2022. https://www.nytimes.com/2022/02/24/arts/rashaad-newsome-assembly-exhibit.html.

Simon, Matt. 2020. “AI Magic Makes Century-Old Films Look New.” Wired, August 12, 2020. https://www.wired.com/story/ai-magic-makes-century-old-films-look-new.

singh, sava saheli. 2022. #tresdancing. March 9, 2022. YouTube video. 21:58. https://www.youtube.com/watch?v=aUivOlVpcWA&ab_channel=ScreeningSurveillance.

Smith, David. 2013. “‘Racism’ of Early Colour Photography Explored in Art Exhibition.” The Guardian, January 25, 2013. https://www.theguardian.com/artanddesign/2013/jan/25/racism-colour-photography-exhibition.

Smith, Roberta. 2008. “A Hot Conceptualist Finds the Secret of Skin.” New York Times, September 5, 2008. https://www.nytimes.com/2008/09/05/arts/design/05stud.html.

Solly, Meilan. 2019. “Art Project Shows Racial Biases in Artificial Intelligence System.” Smithsonian Magazine, September 24, 2019. https://www.smithsonianmag.com/smart-news/art-project-exposed-racial-biases-artificial-intelligence-system-180973207.

Statt, Nick. 2019. “See How an AI System Classifies You Based on Your Selfie.” The Verge, September 16, 2019. https://www.theverge.com/tldr/2019/9/16/20869538/imagenet-roulette-ai-classifier-web-tool-object-image-recognition.

Surveillance Studies Centre. n.d. “sava saheli singh.” Accessed April 6, 2022. https://www.sscqueens.org/people/sava-saheli-singh.

van Leeuwenstein, Jip. n.d. Jip van Leeuwenstein. Accessed April 6, 2022. http://www.jipvanleeuwenstein.nl.

Weyrich, Tim, Henrik Wann Jensen, Markus Gross, Wojciech Matusik, Hanspeter Pfister, Bernd Bickel, Craig Donner, et al. 2006. “Analysis of Human Faces Using a Measurement-Based Skin Reflectance Model.” ACM Transactions on Graphics 25 (3): 1013–24. https://doi.org/10.1145/1141911.1141987.

Wiley, Kehinde. n.d. Kehinde Wiley Studio. Accessed April 6, 2022. https://kehindewiley.com/works.

Wilson, Mark. 2020. “Can You Trick This AI into Thinking You’re Someone You’re Not?” Fast Company, December 2, 2020. https://www.fastcompany.com/90581252/can-you-trick-this-ai-into-making-money-with-your-face.

Wong, Julia Carrie. 2019. “Google Reportedly Targeted People with ‘Dark Skin’ to Improve Facial Recognition.” The Guardian, October 3, 2019. https://www.theguardian.com/technology/2019/oct/03/google-data-harvesting-facial-recognition-people-of-color.

Yup, Kayla. 2022. “Yale Professors Confront Racial Bias in Computer Graphics.” Yale Daily News, March 9, 2022. https://yaledailynews.com/blog/2022/03/09/yale-professors-confront-racial-bias-in-computer-graphics.